群智能算法:三个Python实现

Imagine watching a flock of birds in flight. There's no leader, no one giving directions, yet they swoop and glide together in perfect harmony. It may look like chaos, but there's a hidden order. You can see the same pattern in schools of fish avoiding predators or ants finding the shortest path to food. These creatures rely on simple rules and local communication to tackle surprisingly complex tasks without central control.

That’s the magic of swarm intelligence.

We can replicate this behavior using algorithms that solve tough problems by mimicking swarm intelligence.

Particle Swarm Optimization (PSO)

Particle swarm optimization (PSO) draws its inspiration from the behavior of flocks of birds and schools of fish. In these natural systems, individuals move based on their own previous experiences and their neighbors' positions, gradually adjusting to follow the most successful members of the group. PSO applies this concept to optimization problems, where particles, called agents, move through the search space to find an optimal solution.

Compared to ACO, PSO operates in continuous rather than discrete spaces. In ACO, the focus is on pathfinding and discrete choices, while PSO is better suited for problems involving continuous variables, such as parameter tuning.

In PSO, particles explore a search space. They adjust their positions based on two main factors: their personal best-known position and the best-known position of the entire swarm. This dual feedback mechanism enables them to converge toward the global optimum.

How particle swarm optimization works

The process starts with a swarm of particles initialized randomly across the solution space. Each particle represents a possible solution to the optimization problem. As the particles move, they remember their personal best positions (the best solution they’ve encountered so far) and are attracted toward the global best position (the best solution any particle has found).

This movement is driven by two factors: exploitation and exploration. Exploitation involves refining the search around the current best solution, while exploration encourages particles to search other parts of the solution space to avoid getting stuck in local optima. By balancing these two dynamics, PSO efficiently converges on the best solution.

Particle swarm optimization Python implementation

In financial portfolio management, finding the best way to allocate assets to get the most returns while keeping risks low can be tricky. Let’s use a PSO to find which mix of assets will give us the highest return on investment.

The code below shows how PSO works for optimizing a fictional financial portfolio. It starts with random asset allocations, then tweaks them over several iterations based on what works best, gradually finding the optimal mix of assets for the highest return with the lowest risk.

import numpy as np

import matplotlib.pyplot as plt

# Define the PSO parameters

class Particle:

def __init__(self, n_assets):

# Initialize a particle with random weights and velocities

self.position = np.random.rand(n_assets)

self.position /= np.sum(self.position) # Normalize weights so they sum to 1

self.velocity = np.random.rand(n_assets)

self.best_position = np.copy(self.position)

self.best_score = float('inf') # Start with a very high score

def objective_function(weights, returns, covariance):

"""

Calculate the portfolio's performance.

- weights: Asset weights in the portfolio.

- returns: Expected returns of the assets.

- covariance: Covariance matrix representing risk.

"""

portfolio_return = np.dot(weights, returns) # Calculate the portfolio return

portfolio_risk = np.sqrt(np.dot(weights.T, np.dot(covariance, weights))) # Calculate portfolio risk (standard deviation)

return -portfolio_return / portfolio_risk # We want to maximize return and minimize risk

def update_particles(particles, global_best_position, returns, covariance, w, c1, c2):

"""

Update the position and velocity of each particle.

- particles: List of particle objects.

- global_best_position: Best position found by all particles.

- returns: Expected returns of the assets.

- covariance: Covariance matrix representing risk.

- w: Inertia weight to control particle's previous velocity effect.

- c1: Cognitive coefficient to pull particles towards their own best position.

- c2: Social coefficient to pull particles towards the global best position.

"""

for particle in particles:

# Random coefficients for velocity update

r1, r2 = np.random.rand(len(particle.position)), np.random.rand(len(particle.position))

# Update velocity

particle.velocity = (w * particle.velocity

c1 * r1 * (particle.best_position - particle.position)

c2 * r2 * (global_best_position - particle.position))

# Update position

particle.position = particle.velocity

particle.position = np.clip(particle.position, 0, 1) # Ensure weights are between 0 and 1

particle.position /= np.sum(particle.position) # Normalize weights to sum to 1

# Evaluate the new position

score = objective_function(particle.position, returns, covariance)

if score

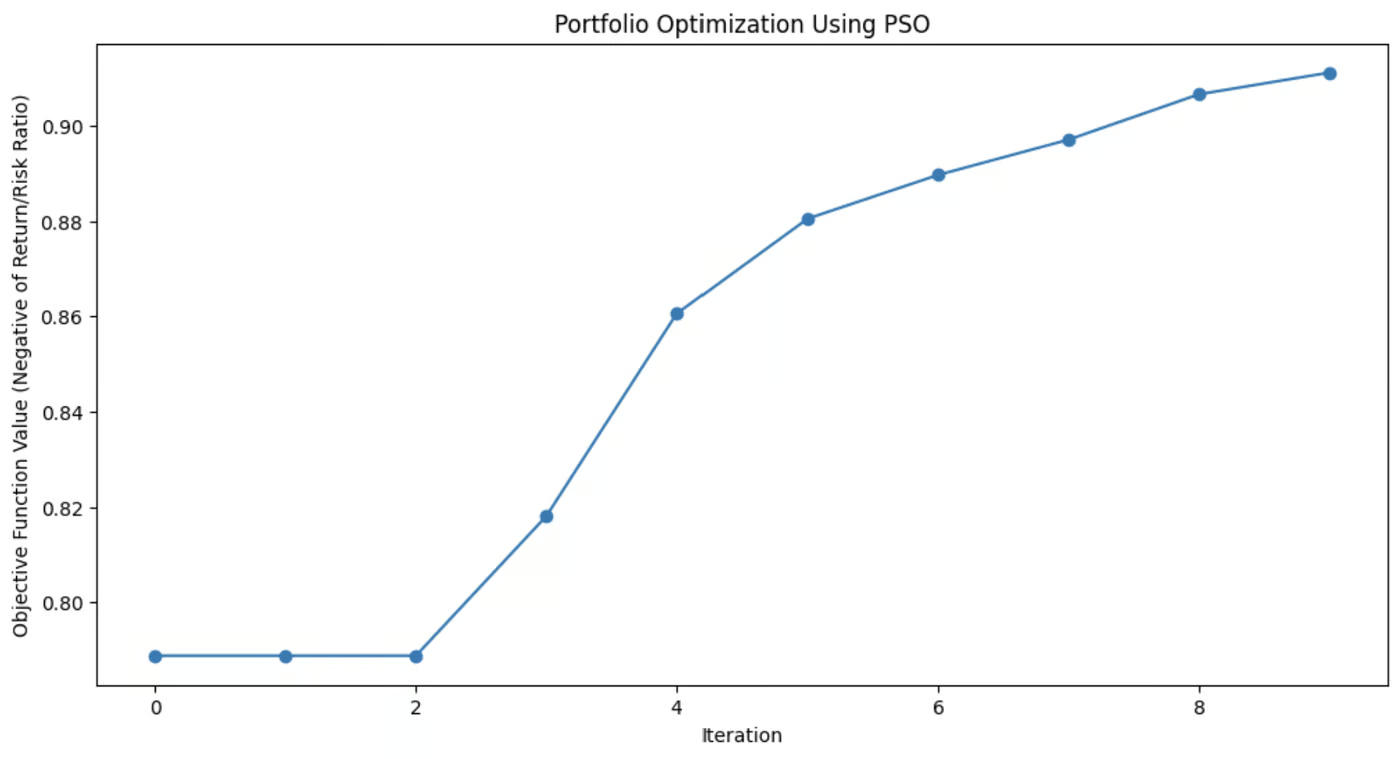

This graph demonstrates how much the PSO algorithm improved the portfolio’s asset mix with each iteration.

Applications of particle swarm optimization

PSO is used for its simplicity and effectiveness in solving various optimization problems, particularly in continuous domains. Its flexibility makes it useful for many real-world scenarios where precise solutions are needed.

These applications include:

- Machine learning: PSO can be applied to tune hyperparameters in machine learning algorithms, helping to find the best model configurations.

- Engineering design: PSO is useful for optimizing design parameters for systems like aerospace components or electrical circuits.

- Financial modeling: In finance, PSO can help in portfolio optimization, minimizing risk while maximizing returns.

PSO's ability to efficiently explore solution spaces makes it applicable across fields, from robotics to energy management to logistics.

Artificial Bee Colony (ABC)

The artificial bee colony (ABC) algorithm is modeled on the foraging behavior of honeybees.

In nature, honeybees efficiently search for nectar sources and share this information with other members of the hive. ABC captures this collaborative search process and applies it to optimization problems, especially those involving complex, high-dimensional spaces.

What sets ABC apart from other swarm intelligence algorithms is its ability to balance exploitation, focusing on refining current solutions, and exploration, searching for new and potentially better solutions. This makes ABC particularly useful for large-scale problems where global optimization is key.

How artificial bee colony works

In the ABC algorithm, the swarm of bees is divided into three specialized roles: employed bees, onlookers, and scouts. Each of these roles mimics a different aspect of how bees search for and exploit food sources in nature.

- Employed bees: These bees are responsible for exploring known food sources, representing current solutions in the optimization problem. They assess the quality (fitness) of these sources and share the information with the rest of the hive.

- Onlooker bees: After gathering information from the employed bees, onlookers select which food sources to explore further. They base their choices on the quality of the solutions shared by the employed bees, focusing more on the better options, thus refining the search for an optimal solution.

- Scout bees: When an employed bee’s food source (solution) becomes exhausted or stagnant (when no improvement is found after a certain number of iterations), the bee becomes a scout. Scouts explore new areas of the solution space, searching for potentially unexplored food sources, thus injecting diversity into the search process.

This dynamic allows ABC to balance the search between intensively exploring promising areas and broadly exploring new areas of the search space. This helps the algorithm avoid getting trapped in local optima and increases its chances of finding a global optimum.

Artificial bee colony Python implementation

The Rastrigin function is a popular problem in optimization, known for its numerous local minima, making it a tough challenge for many algorithms. The goal is simple: find the global minimum.

In this example, we’ll use the artificial bee colony algorithm to tackle this problem. Each bee in the ABC algorithm explores the search space, looking for better solutions to minimize the function. The code simulates bees that explore, exploit, and scout for new areas, ensuring a balance between exploration and exploitation.

import numpy as np

import matplotlib.pyplot as plt

# Rastrigin function: The objective is to minimize this function

def rastrigin(X):

A = 10

return A * len(X) sum([(x ** 2 - A * np.cos(2 * np.pi * x)) for x in X])

# Artificial Bee Colony (ABC) algorithm for continuous optimization of Rastrigin function

def artificial_bee_colony_rastrigin(n_iter=100, n_bees=30, dim=2, bound=(-5.12, 5.12)):

"""

Apply Artificial Bee Colony (ABC) algorithm to minimize the Rastrigin function.

Parameters:

n_iter (int): Number of iterations

n_bees (int): Number of bees in the population

dim (int): Number of dimensions (variables)

bound (tuple): Bounds for the search space (min, max)

Returns:

tuple: Best solution found, best fitness value, and list of best fitness values per iteration

"""

# Initialize the bee population with random solutions within the given bounds

bees = np.random.uniform(bound[0], bound[1], (n_bees, dim))

best_bee = bees[0]

best_fitness = rastrigin(best_bee)

best_fitnesses = []

for iteration in range(n_iter):

# Employed bees phase: Explore new solutions based on the current bees

for i in range(n_bees):

# Generate a new candidate solution by perturbing the current bee's position

new_bee = bees[i] np.random.uniform(-1, 1, dim)

new_bee = np.clip(new_bee, bound[0], bound[1]) # Keep within bounds

# Evaluate the fitness of the new solution

new_fitness = rastrigin(new_bee)

if new_fitness

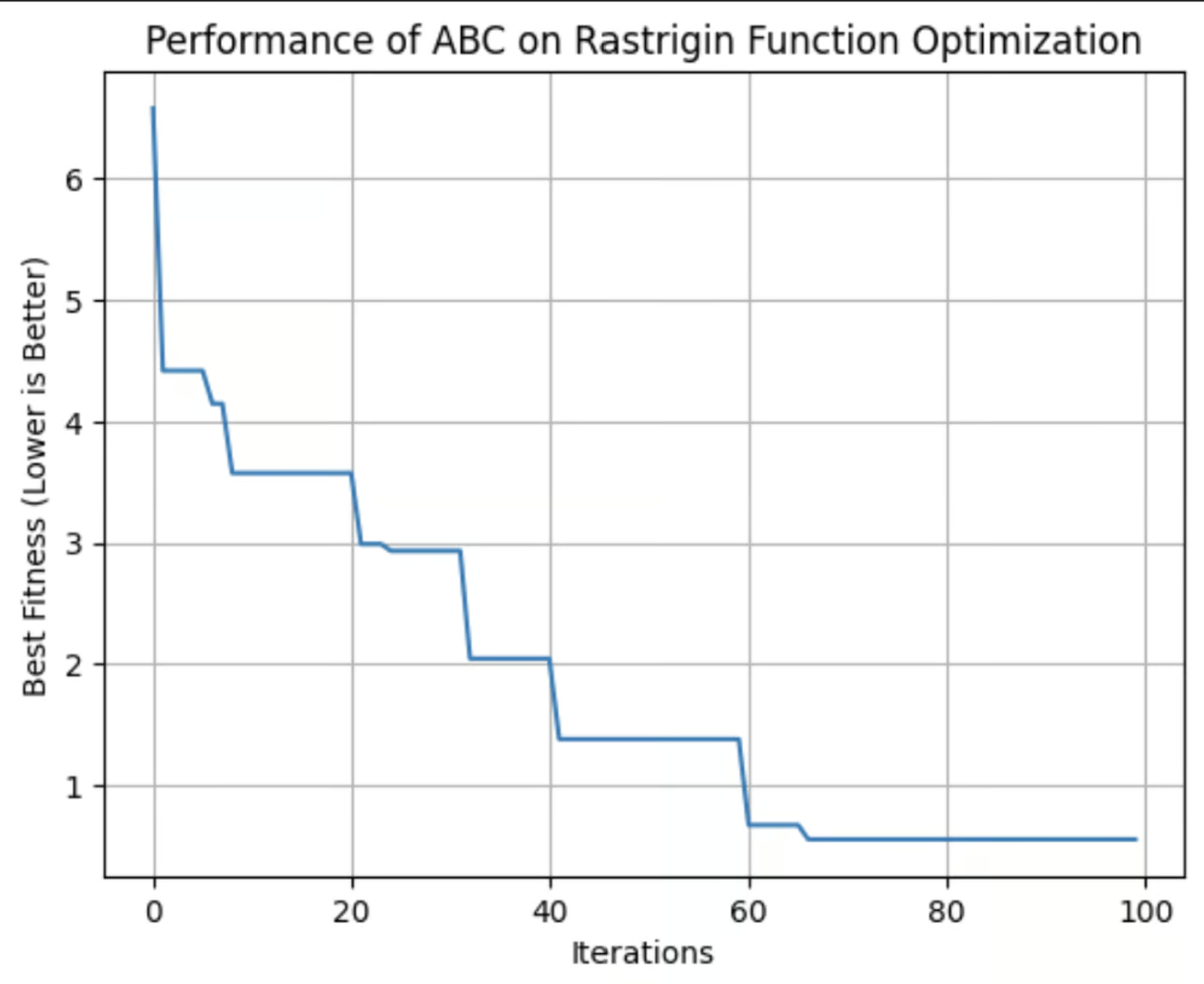

This graph shows the fitness of the best solution found by the ABC algorithm with each iteration. In this run, it reached its optimum fitness around the 64th iteration.

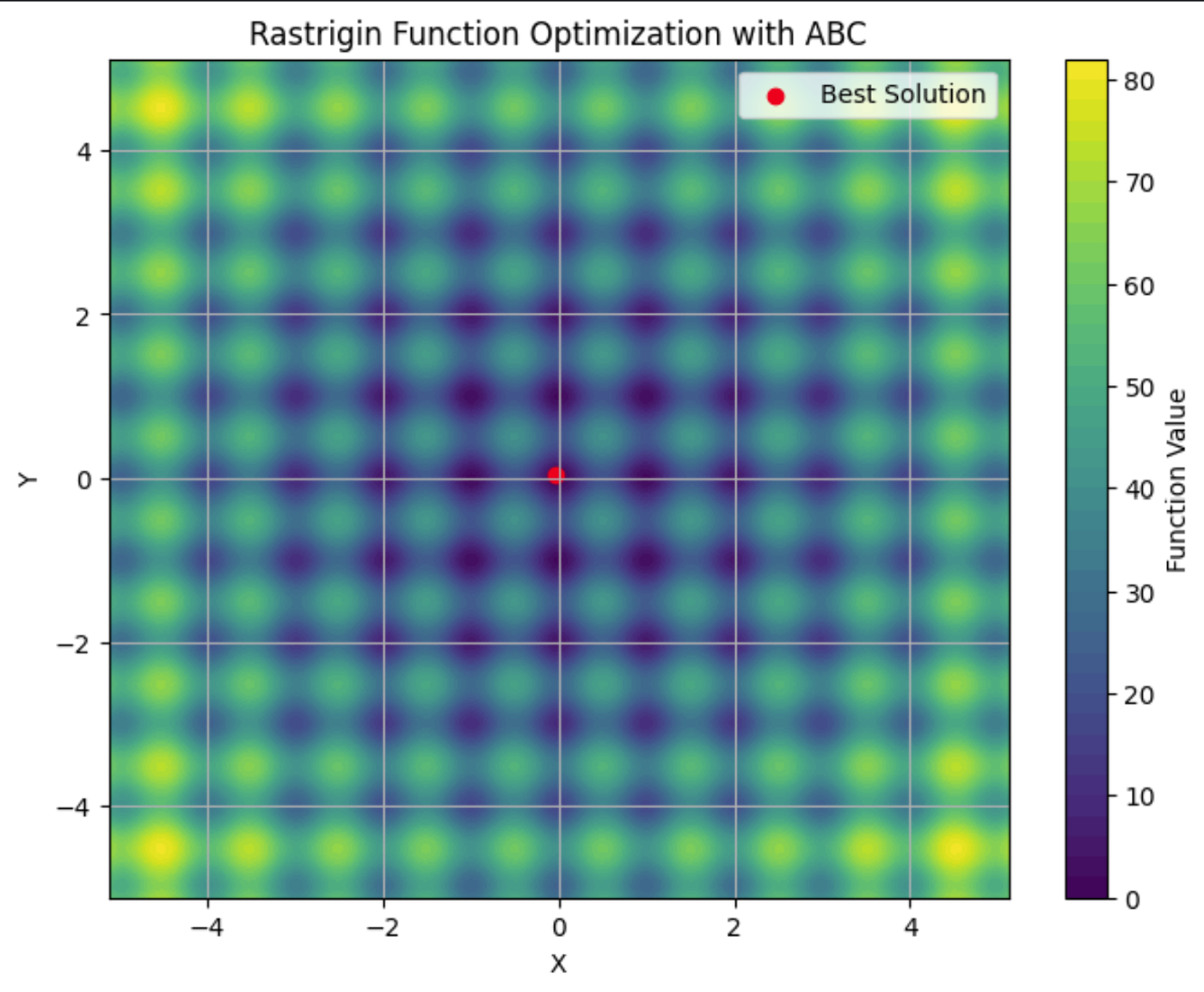

Here you can see the Rastrigin function plotted on a contour plot, with its many local minima. The red dot is the global minima found by the ABC algorithm we ran.

Applications of artificial bee colony

The ABC algorithm is a robust tool for solving optimization problems. Its ability to efficiently explore large and complex search spaces makes it a go-to choice for industries where adaptability and scalability are critical.

These applications include:

- Telecommunications: ABC can be used to optimize the placement of network resources and antennas, maximizing coverage and signal strength while minimizing costs.

- Engineering: ABC can fine-tune parameters in structural design optimization.

- Data Science: ABC can be applied to feature selection, to identify the most important variables in a dataset for machine learning.

ABC is a flexible algorithm suitable for any problem where optimal solutions need to be found in dynamic, high-dimensional environments. Its decentralized nature makes it well-suited for situations where other algorithms may struggle to balance exploration and exploitation efficiently.

Comparing Swarm Intelligence Algorithms

There are multiple swarm intelligence algorithms, each with different attributes. When deciding which to use, it's important to weigh their strengths and weaknesses to decide which best suits your needs.

ACO is effective for combinatorial problems like routing and scheduling but may need significant computational resources. PSO is simpler and excels in continuous optimization, such as hyperparameter tuning, but can struggle with local optima. ABC successfully balances exploration and exploitation, though it requires careful tuning.

Other swarm intelligence algorithms, such as Firefly Algorithm and Cuckoo Search Optimization, also offer unique advantages for specific types of optimization problems.

|

Algorithm |

Strengths |

Weaknesses |

Preferred Libraries |

Best Applications |

|

Ant Colony Optimization (ACO) |

Effective for combinatorial problems and handles complex discrete spaces well |

Computationally intensive and requires fine-tuning |

pyaco |

Routing problems, scheduling, and resource allocation |

|

Particle Swarm Optimization (PSO) |

Good for continuous optimization and simple and easy to implement |

Can converge to local optima and is less effective for discrete problems |

pyswarms |

Hyperparameter tuning, engineering design, financial modeling |

|

Artificial Bee Colony (ABC) |

Adaptable to large, dynamic problems and balanced exploration and exploitation |

Computationally intensive and requires careful parameter tuning |

beecolpy |

Telecommunications, large-scale optimization, and high-dimensional spaces |

|

Firefly Algorithm (FA) |

Excels in multimodal optimization and has strong global search ability |

Sensitive to parameter settings and slower convergence |

fireflyalgorithm |

Image processing, engineering design, and multimodal optimization |

|

Cuckoo Search (CS) |

Efficient for solving optimization problems and has strong exploration capabilities |

May converge prematurely and performance depends on tuning |

cso |

Scheduling, feature selection, and engineering applications |

Challenges and Limitations

Swarm intelligence algorithms, like many machine learning techniques, encounter challenges that can affect their performance. These include:

- Premature convergence: The swarm may settle on a suboptimal solution too quickly.

- Parameter tuning: Achieving optimal results often requires careful adjustment of algorithm settings.

- Computational resources & scalability: These algorithms can be computationally intensive, especially with larger, more complex problems, and their performance might degrade as problem complexity increases.

- Stochastic nature: The inherent randomness in these algorithms can lead to variability in results.

Latest Research and Advancements

A notable trend is the integration of swarm intelligence with other machine learning techniques. Researchers are exploring how swarm algorithms can enhance tasks such as feature selection and hyperparameter optimization. Check out A hybrid particle swarm optimization algorithm for solving engineering problem.

Recent advancements also focus on addressing some of the traditional challenges associated with swarm intelligence, such as premature convergence. New algorithms and techniques are being developed to mitigate the risk of converging on suboptimal solutions. For more information, check out Memory-based approaches for eliminating premature convergence in particle swarm optimization

Scalability is another significant area of research. As problems become increasingly complex and data volumes grow, researchers are working on ways to make swarm intelligence algorithms more scalable and efficient. This includes developing algorithms that can handle large datasets and high-dimensional spaces more effectively, while optimizing computational resources to reduce the time and cost associated with running these algorithms. For more on this, check out Recent Developments in the Theory and Applicability of Swarm Search.

Swarm algorithms are being applied to problems from robotics, to large language models (LLMs), to medical diagnosis. There is ongoing research into whether these algorithms can be useful for helping LLMs strategically forget information to comply with Right to Be Forgotten regulations. And, of course, swarm algorithms have a multitude of applications in data science.

Conclusion

Swarm intelligence offers powerful solutions for optimization problems across various industries. Its principles of decentralization, positive feedback, and adaptation allow it to tackle complex, dynamic tasks that traditional algorithms might struggle with.

Check out this review of the current state of swarm algorithms, “Swarm intelligence: A survey of model classification and applications”.

For a deeper dive into the business applications of AI, check out Artificial Intelligence (AI) Strategy or Artificial Intelligence for Business Leaders. To learn about other algorithms that imitate nature, check out Genetic Algorithm: Complete Guide With Python Implementation.

-

AI Agents是什么?- 解析与应用指南Artificial Intelligence (AI) is rapidly evolving, and 2025 is shaping up to be the year of AI agents. But what are AI agents...人工智能 发布于2025-05-01

AI Agents是什么?- 解析与应用指南Artificial Intelligence (AI) is rapidly evolving, and 2025 is shaping up to be the year of AI agents. But what are AI agents...人工智能 发布于2025-05-01 -

Python中使用OpenCV和Roboflow进行性别检测 - 分析Vidhya介绍 从面部图像中检测是计算机视觉的众多迷人应用之一。在此项目中,我们将OPENCV结合起来,以面对位置和用于性别分类的Roboflow API,制作一种识别面部的设备,检查它们并预测其性别。我们将利用Python(尤其是在Google Colab中)输入和运行此代码。该直接提供了易于遵循的代码的演...人工智能 发布于2025-04-29

Python中使用OpenCV和Roboflow进行性别检测 - 分析Vidhya介绍 从面部图像中检测是计算机视觉的众多迷人应用之一。在此项目中,我们将OPENCV结合起来,以面对位置和用于性别分类的Roboflow API,制作一种识别面部的设备,检查它们并预测其性别。我们将利用Python(尤其是在Google Colab中)输入和运行此代码。该直接提供了易于遵循的代码的演...人工智能 发布于2025-04-29 -

机器先行思考:战略AI崛起STRATEGIC AI Prologue 11. May 1997, New York City. It was a beautiful spring day in New York City. The skies were clear, and temperatures were climbin...人工智能 发布于2025-04-29

机器先行思考:战略AI崛起STRATEGIC AI Prologue 11. May 1997, New York City. It was a beautiful spring day in New York City. The skies were clear, and temperatures were climbin...人工智能 发布于2025-04-29 -

LLM必备的8个免费与付费API推荐利用LLMS的力量:大型语言模型的API指南 在当今的动态业务格局中,API(应用程序编程接口)正在革新我们如何整合和利用AI功能。 它们充当必不可少的桥梁,无缝将大型语言模型(LLM)连接到不同的软件生态系统。 这种有效的数据交换和功能共享允许应用程序充分利用开放和封闭源LLM的功率。本文探讨...人工智能 发布于2025-04-21

LLM必备的8个免费与付费API推荐利用LLMS的力量:大型语言模型的API指南 在当今的动态业务格局中,API(应用程序编程接口)正在革新我们如何整合和利用AI功能。 它们充当必不可少的桥梁,无缝将大型语言模型(LLM)连接到不同的软件生态系统。 这种有效的数据交换和功能共享允许应用程序充分利用开放和封闭源LLM的功率。本文探讨...人工智能 发布于2025-04-21 -

使用指南:Falcon 3-7B Instruct模型中的革命性飞跃 关键功能和改进 可扩展的模型大小:有各种尺寸(1b,3b,7b和10b parameters),为各种应用程序提供了灵活性。 高级文本生成:文本生成中的异常功能,包括细微的上下文理解和特定于任务的应用程序。 本文是数据科学博客马拉松的一部分。 目录 架构设计 性能基准 [2 [2 使...人工智能 发布于2025-04-20

使用指南:Falcon 3-7B Instruct模型中的革命性飞跃 关键功能和改进 可扩展的模型大小:有各种尺寸(1b,3b,7b和10b parameters),为各种应用程序提供了灵活性。 高级文本生成:文本生成中的异常功能,包括细微的上下文理解和特定于任务的应用程序。 本文是数据科学博客马拉松的一部分。 目录 架构设计 性能基准 [2 [2 使...人工智能 发布于2025-04-20 -

DeepSeek-V3对比GPT-4o与Llama 3.3 70B:最强AI模型揭秘The evolution of AI language models has set new standards, especially in the coding and programming landscape. Leading the c...人工智能 发布于2025-04-18

DeepSeek-V3对比GPT-4o与Llama 3.3 70B:最强AI模型揭秘The evolution of AI language models has set new standards, especially in the coding and programming landscape. Leading the c...人工智能 发布于2025-04-18 -

盘点Top 5 AI智能预算工具通过AI解锁财务自由:印度的最高预算应用程序 您是否厌倦了不断想知道您的钱去了哪里? 账单似乎会吞噬您的收入吗? 人工智能(AI)提供了强大的解决方案。 AI预算工具提供实时财务见解,个性化建议和动态计划,使资金管理更简单,更准确。 有些甚至使用生成AI提供基于聊天的财务分析!本文探讨了印度可...人工智能 发布于2025-04-17

盘点Top 5 AI智能预算工具通过AI解锁财务自由:印度的最高预算应用程序 您是否厌倦了不断想知道您的钱去了哪里? 账单似乎会吞噬您的收入吗? 人工智能(AI)提供了强大的解决方案。 AI预算工具提供实时财务见解,个性化建议和动态计划,使资金管理更简单,更准确。 有些甚至使用生成AI提供基于聊天的财务分析!本文探讨了印度可...人工智能 发布于2025-04-17 -

Excel SUMPRODUCT函数详解 - 数据分析学院Excel的SumProduct函数:数据分析PowerHouse 解锁Excel的Sumproduct函数的功能,以用于简化数据分析。这种多功能功能毫不费力地结合了求和功能,扩展到跨相应范围或数组的加法,减法和分裂。 无论您是分析趋势还是解决复杂的计算,Sumproduct都会将数字转换为可...人工智能 发布于2025-04-16

Excel SUMPRODUCT函数详解 - 数据分析学院Excel的SumProduct函数:数据分析PowerHouse 解锁Excel的Sumproduct函数的功能,以用于简化数据分析。这种多功能功能毫不费力地结合了求和功能,扩展到跨相应范围或数组的加法,减法和分裂。 无论您是分析趋势还是解决复杂的计算,Sumproduct都会将数字转换为可...人工智能 发布于2025-04-16 -

深度研究全面开放,ChatGPT Plus用户福利Openai的深入研究:改变游戏的AI研究 Openai已为所有Chatgpt加上订户释放了深入的研究,并承诺在研究效率方面具有重大提高。 在测试了双子座,Grok 3和困惑等竞争对手的类似功能之后,我可以自信地将Openai的深入研究宣布为出色的选择。此博客深入研究了它的功能。 目录 什么是...人工智能 发布于2025-04-16

深度研究全面开放,ChatGPT Plus用户福利Openai的深入研究:改变游戏的AI研究 Openai已为所有Chatgpt加上订户释放了深入的研究,并承诺在研究效率方面具有重大提高。 在测试了双子座,Grok 3和困惑等竞争对手的类似功能之后,我可以自信地将Openai的深入研究宣布为出色的选择。此博客深入研究了它的功能。 目录 什么是...人工智能 发布于2025-04-16 -

亚马逊Nova Today真实体验与评测 - Analytics Vidhya亚马逊最近的回复:Invent 2024活动展示了Nova,这是其最先进的基础模型套件,旨在彻底改变AI和内容创建。本文深入研究了Nova的架构,通过动手实例探索其功能,并检查基准结果。 我们将介绍功能,评论,基准和对AI应用程序的影响。 [2 此探索将涵盖Amazon Nova的功能,详细的评论...人工智能 发布于2025-04-16

亚马逊Nova Today真实体验与评测 - Analytics Vidhya亚马逊最近的回复:Invent 2024活动展示了Nova,这是其最先进的基础模型套件,旨在彻底改变AI和内容创建。本文深入研究了Nova的架构,通过动手实例探索其功能,并检查基准结果。 我们将介绍功能,评论,基准和对AI应用程序的影响。 [2 此探索将涵盖Amazon Nova的功能,详细的评论...人工智能 发布于2025-04-16 -

ChatGPT定时任务功能的5种使用方法Chatgpt的新计划任务:使用AI 自动化您的一天 Chatgpt最近引入了一个改变游戏规则的功能:计划的任务。 这允许用户自动化重复提示,即使在离线时,也可以在预定时间接收通知或响应。想象一下每天策划的新闻通讯,自动化工作时间表或及时的习惯提醒 - 所有这些都是由Chatgpt自动处理的。...人工智能 发布于2025-04-16

ChatGPT定时任务功能的5种使用方法Chatgpt的新计划任务:使用AI 自动化您的一天 Chatgpt最近引入了一个改变游戏规则的功能:计划的任务。 这允许用户自动化重复提示,即使在离线时,也可以在预定时间接收通知或响应。想象一下每天策划的新闻通讯,自动化工作时间表或及时的习惯提醒 - 所有这些都是由Chatgpt自动处理的。...人工智能 发布于2025-04-16 -

三款AI聊机器人对同一提示的反应,哪个最佳?这是我发现的。在精心策划且详细的提示中扮演着质量良好的提示,在输出的质量中扮演任何cathbot生产的质量。与所有工具一样,输出仅与使用该工具的人的技能一样好。 AI聊天机器人没有什么不同。 有了这种理解,我指示每个模型创建一个针对个人理财的基本指南。这种方法使我能够评估多个相互联系的主题(特别是...人工智能 发布于2025-04-15

三款AI聊机器人对同一提示的反应,哪个最佳?这是我发现的。在精心策划且详细的提示中扮演着质量良好的提示,在输出的质量中扮演任何cathbot生产的质量。与所有工具一样,输出仅与使用该工具的人的技能一样好。 AI聊天机器人没有什么不同。 有了这种理解,我指示每个模型创建一个针对个人理财的基本指南。这种方法使我能够评估多个相互联系的主题(特别是...人工智能 发布于2025-04-15 -

ChatGPT足矣,无需专用AI聊机在一个新的AI聊天机器人每天启动的世界中,决定哪一个是正确的“一个”。但是,以我的经验,chatgpt处理了我所丢下的几乎所有内容,而无需在平台之间切换,只需稍有及时的工程。 在许多实践应用程序中可能会让您感到惊讶。它的范围令人印象深刻,使用户可以生成代码段,草稿求职信,甚至翻译语言。这种多功能性...人工智能 发布于2025-04-14

ChatGPT足矣,无需专用AI聊机在一个新的AI聊天机器人每天启动的世界中,决定哪一个是正确的“一个”。但是,以我的经验,chatgpt处理了我所丢下的几乎所有内容,而无需在平台之间切换,只需稍有及时的工程。 在许多实践应用程序中可能会让您感到惊讶。它的范围令人印象深刻,使用户可以生成代码段,草稿求职信,甚至翻译语言。这种多功能性...人工智能 发布于2025-04-14 -

印度AI时刻:与中美在生成AI领域竞赛印度的AI抱负:2025 Update 与中国和美国在生成AI上进行了大量投资,印度正在加快自己的Genai计划。 不可否认的是,迫切需要迎合印度各种语言和文化景观的土著大语模型(LLM)和AI工具。 本文探讨了印度新兴的Genai生态系统,重点介绍了2025年工会预算,公司参与,技能开发计划...人工智能 发布于2025-04-13

印度AI时刻:与中美在生成AI领域竞赛印度的AI抱负:2025 Update 与中国和美国在生成AI上进行了大量投资,印度正在加快自己的Genai计划。 不可否认的是,迫切需要迎合印度各种语言和文化景观的土著大语模型(LLM)和AI工具。 本文探讨了印度新兴的Genai生态系统,重点介绍了2025年工会预算,公司参与,技能开发计划...人工智能 发布于2025-04-13 -

使用Airflow和Docker自动化CSV到PostgreSQL的导入本教程演示了使用Apache气流,Docker和PostgreSQL构建强大的数据管道,以使数据传输从CSV文件自动化到数据库。 我们将介绍有效工作流程管理的核心气流概念,例如DAG,任务和操作员。 该项目展示了创建可靠的数据管道,该数据管线读取CSV数据并将其写入PostgreSQL数据库。我们...人工智能 发布于2025-04-12

使用Airflow和Docker自动化CSV到PostgreSQL的导入本教程演示了使用Apache气流,Docker和PostgreSQL构建强大的数据管道,以使数据传输从CSV文件自动化到数据库。 我们将介绍有效工作流程管理的核心气流概念,例如DAG,任务和操作员。 该项目展示了创建可靠的数据管道,该数据管线读取CSV数据并将其写入PostgreSQL数据库。我们...人工智能 发布于2025-04-12

学习中文

- 1 走路用中文怎么说?走路中文发音,走路中文学习

- 2 坐飞机用中文怎么说?坐飞机中文发音,坐飞机中文学习

- 3 坐火车用中文怎么说?坐火车中文发音,坐火车中文学习

- 4 坐车用中文怎么说?坐车中文发音,坐车中文学习

- 5 开车用中文怎么说?开车中文发音,开车中文学习

- 6 游泳用中文怎么说?游泳中文发音,游泳中文学习

- 7 骑自行车用中文怎么说?骑自行车中文发音,骑自行车中文学习

- 8 你好用中文怎么说?你好中文发音,你好中文学习

- 9 谢谢用中文怎么说?谢谢中文发音,谢谢中文学习

- 10 How to say goodbye in Chinese? 再见Chinese pronunciation, 再见Chinese learning